Enabling Developer Autonomy with Terraform Platform Engineering

With the growing complexity of software development and business requirements, the autonomy and efficiency of development teams have taken a hit. Internal Developer Platforms (IDPs) are a growing trend that attempts to solve this by removing the disconnect between developers and the infrastructure they deploy to. Platform engineering is the emerging discipline of building and managing these IDPs using Infrastructure-as-Code (IaC). Platform engineers set up an IDP to create reproducible environments that developers can request, typically through a developer portal. By using an IDP, developers can change configurations and deploy their own templated environments without having to "throw it over the fence" to the platform team.

Gartner predicts that by 2026, 80% of large software engineering organizations will have established platform engineering teams. IDPs have a multitude of benefits for development teams, such as reducing cognitive load, automating repetitive work, and providing consistent, standardized tooling. However, these gains don't come for free. There is inherent complexity involved in building these platforms.

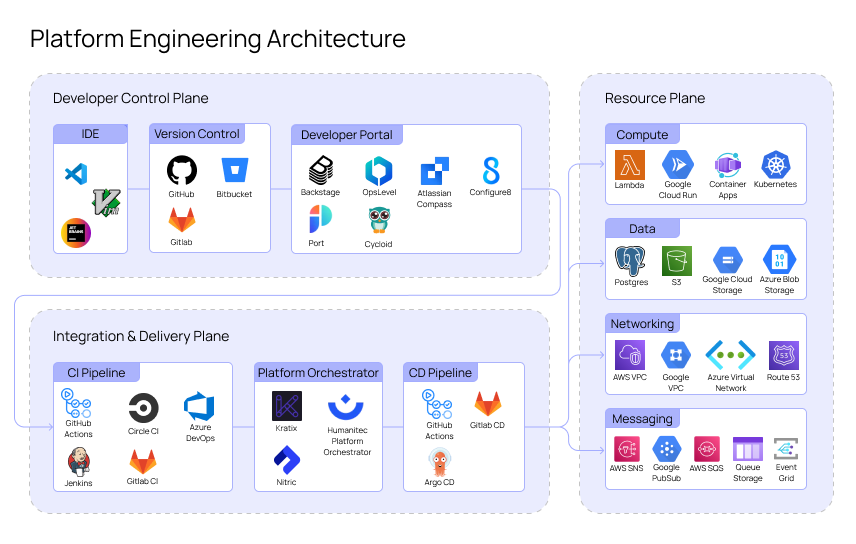

Platform Engineering Architecture

IDPs are commonly split into three components: the developer control plane, the integration and delivery plane, and the resource plane. You can layer in more planes based on your requirements. These additional planes are frequently focused on monitoring and security. In essence, these abstract planes categorize related technologies.

Developer Control Plane

The developer control plane groups tools an application developer interacts with to request their deployed infrastructure. A general workflow for a developer using an IDP would involve writing their application in an IDE, pushing the application to a version control repository, and requesting an environment on the developer portal. Therefore, this plane includes the IDE, version control, application source code, and the developer portal.

Integration & Delivery Plane

The integration and delivery plane is concerned with orchestrating the resources to deploy. As the name implies, this plane is normally built around a CI/CD pipeline. The pipeline is generally connected to a version control system so that developers can make changes without having to contact the platform team. The key component of this plane is the platform orchestrator, which takes the application code and converts it into a list of resources that can be deployed. Many IDPs use an explicit manifest that maps to infrastructure modules the platform team has built. The manifest is stored in version control, which provides governance and a record of how the application infrastructure has grown over time. A manifest might look like this:

```yaml
version: dev/0.0.1
name: example-manifest
containers:
  example-service:
    image: example
    env:
      - CONNECTION_STRING: postgresql://user:pass@localhost:5432/db
resources:
  db:
    type: postgres
  storage:
    type: s3
```

A platform orchestrator might take this manifest and see that it's requesting three distinct resources: a container, a Postgres database, and an S3 bucket. The platform team doesn't need to manually provision and coordinate these resources, because it has already created a standard set of modules that have been tested and approved. No context is lost by passing the required infrastructure specification across teams, which gives development teams true self-service infrastructure.
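As a rough illustration of that resolution step, the sketch below maps each entry in the example manifest to a pre-approved module and rejects anything the platform team has not published. The `Manifest` shape, the `approvedModules` catalog, its module paths, and the `planResources` function are all hypothetical names invented for this example; a real orchestrator will have its own schema and lookup rules.

```typescript
// Hypothetical manifest shape, mirroring the example manifest above.
interface Manifest {
  name: string;
  containers: Record<string, { image: string }>;
  resources: Record<string, { type: string }>;
}

// Hypothetical catalog of modules the platform team has approved.
// The paths are placeholders, not real module sources.
const approvedModules: Record<string, string> = {
  container: "./modules/container",
  postgres: "./modules/postgres",
  s3: "./modules/s3-bucket",
};

interface PlannedResource {
  name: string;
  type: string;
  modulePath: string;
}

// Resolve every manifest entry to an approved module, rejecting any
// resource type that has no entry in the catalog.
function planResources(manifest: Manifest): PlannedResource[] {
  const requested: { name: string; type: string }[] = [];
  for (const name of Object.keys(manifest.containers)) {
    requested.push({ name, type: "container" });
  }
  for (const name of Object.keys(manifest.resources)) {
    requested.push({ name, type: manifest.resources[name].type });
  }

  return requested.map(({ name, type }) => {
    const modulePath = approvedModules[type];
    if (modulePath === undefined) {
      throw new Error(`No approved module for resource type "${type}"`);
    }
    return { name, type, modulePath };
  });
}

const exampleManifest: Manifest = {
  name: "example-manifest",
  containers: { "example-service": { image: "example" } },
  resources: { db: { type: "postgres" }, storage: { type: "s3" } },
};

const plan = planResources(exampleManifest);
console.log(plan.map((p) => `${p.name} -> ${p.modulePath}`));
```

Failing fast on an unknown type is one way to enforce the guarantee that developers can only request infrastructure the platform team has already tested.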

Resource Plane

The resource plane contains the resources themselves, including compute, data management, networking, and messaging services. This is the part of the platform where the application is running.

Using Terraform for Platform Engineering

Terraform is an excellent option for IaC tooling with platform engineering due to the modularity that is built into the core framework. This can be used in conjunction with a platform orchestration tool like Nitric. Nitric takes application code and converts it into an infrastructure specification that can be used with Terraform modules.

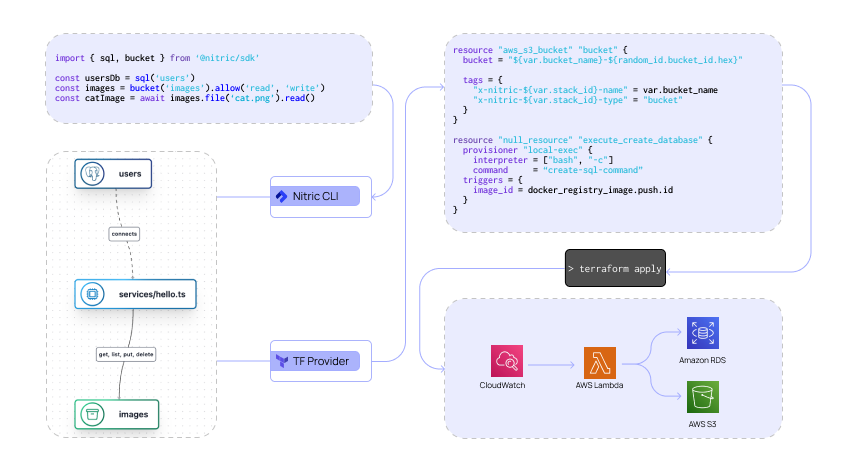

The difference in Nitric's approach is that it skips the manifest completely, inferring your infrastructure from your code. For example, an application defining a postgres database, S3 bucket, and container service would look like this using Nitric:

```typescript
import { sql, bucket } from '@nitric/sdk'

const usersDb = sql('users')
const images = bucket('images').allow('read', 'write')

const catImage = await images.file('cat.png').read()
```

The orchestrator would then take this code and convert it into the following infrastructure specification. You will notice that the container is inferred to be required, as is the security policy for the bucket.

```json
{
  "resources": [
    {
      "id": { "type": "SqlDatabase", "name": "users" },
      "sqlDatabase": {}
    },
    {
      "id": { "type": "Bucket", "name": "images" },
      "bucket": {}
    },
    {
      "id": { "type": "Policy", "name": "b38cd6b688241461c4f6a8344601ba95" },
      "policy": {
        "principals": [
          { "id": { "type": "Service", "name": "summer-breeze_services-hello" } }
        ],
        "actions": ["BucketFileList", "BucketFileGet", "BucketFilePut"],
        "resources": [
          { "id": { "type": "Bucket", "name": "images" } }
        ]
      }
    },
    {
      "id": { "type": "Service", "name": "summer-breeze_services-hello" },
      "service": {
        "image": { "uri": "summer-breeze_services-hello" },
        "type": "default"
      }
    }
  ]
}
```
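To make the inferred security policy more concrete, here is a minimal sketch of how permissions requested in code could be expanded into policy actions. It is not Nitric's implementation: the action names are taken from the specification, but the grouping of actions under `read` and `write`, along with the `actionsFor` table and the `inferBucketPolicy` function, are assumptions made for illustration.

```typescript
// Permissions a developer can request in application code.
type BucketPermission = "read" | "write";

// Assumed mapping from permission to policy actions. The action names
// appear in the specification; how they are grouped here is a guess.
const actionsFor: Record<BucketPermission, string[]> = {
  read: ["BucketFileList", "BucketFileGet"],
  write: ["BucketFilePut"],
};

interface PolicySpec {
  principals: { id: { type: "Service"; name: string } }[];
  actions: string[];
  resources: { id: { type: "Bucket"; name: string } }[];
}

// Build a policy that grants one service only the requested actions
// on one bucket.
function inferBucketPolicy(
  serviceName: string,
  bucketName: string,
  permissions: BucketPermission[],
): PolicySpec {
  const actions: string[] = [];
  for (const permission of permissions) {
    for (const action of actionsFor[permission]) {
      // Skip duplicates in case a permission is listed twice.
      if (actions.indexOf(action) === -1) {
        actions.push(action);
      }
    }
  }
  return {
    principals: [{ id: { type: "Service", name: serviceName } }],
    actions,
    resources: [{ id: { type: "Bucket", name: bucketName } }],
  };
}

const policy = inferBucketPolicy(
  "summer-breeze_services-hello",
  "images",
  ["read", "write"],
);
console.log(policy.actions);
```

Because the policy is derived from what the code asks for, a service that only requests `read` would never receive `BucketFilePut`. For `read` and `write` together, the sketch yields the same three actions listed in the Policy entry of the specification.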

With Nitric, this specification is sent to the deployment engine, which links each resource to the Terraform module that has been defined for that use case. Terraform's module system lets you split each module into deployment code, input variables, and output values. The following code is an example of a bucket module that could be used to deploy the Nitric bucket resource:

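```hcl
# main.tf

# Random suffix that keeps the generated bucket name unique.
# Assumed definition: the bucket resource references random_id.bucket_id,
# and byte_length = 8 is an illustrative value.
resource "random_id" "bucket_id" {
  byte_length = 8
}

resource "aws_s3_bucket" "bucket" {
  bucket = "${var.bucket_name}-${random_id.bucket_id.hex}"

  # Tags identify the bucket as belonging to this Nitric stack.
  tags = {
    "x-nitric-${var.stack_id}-name" = var.bucket_name
    "x-nitric-${var.stack_id}-type" = "bucket"
  }
}
```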

```hcl
# outputs.tf

output "bucket_arn" {
  description = "The ARN of the deployed bucket"
  value       = aws_s3_bucket.bucket.arn
}
```

```hcl
# variables.tf

variable "bucket_name" {
  description = "The name of the bucket. This must be globally unique."
  type        = string
}

variable "stack_id" {
  description = "The ID of the Nitric stack"
  type        = string
}
```
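To show how a resource from the specification could be wired to the bucket module above, the following sketch emits one module call per `Bucket` resource using Terraform's JSON configuration syntax. This is an illustration under stated assumptions rather than a description of how Nitric's deployment engine works: the `renderBucketModules` function, the `./modules/bucket` source path, and the `example-stack` ID are all hypothetical.

```typescript
// Minimal slice of a resource entry from the infrastructure specification.
interface ResourceSpec {
  id: { type: string; name: string };
}

// Inputs accepted by the bucket module, matching variables.tf.
interface BucketModuleCall {
  source: string;
  bucket_name: string;
  stack_id: string;
}

// Hypothetical local path to the bucket module.
const BUCKET_MODULE_SOURCE = "./modules/bucket";

// Produce a Terraform JSON document with one module call per Bucket
// resource. Other resource types are ignored in this sketch.
function renderBucketModules(
  resources: ResourceSpec[],
  stackId: string,
): { module: Record<string, BucketModuleCall> } {
  const moduleBlocks: Record<string, BucketModuleCall> = {};
  for (const resource of resources) {
    if (resource.id.type !== "Bucket") {
      continue;
    }
    moduleBlocks[`bucket_${resource.id.name}`] = {
      source: BUCKET_MODULE_SOURCE,
      bucket_name: resource.id.name,
      stack_id: stackId,
    };
  }
  return { module: moduleBlocks };
}

const terraformConfig = renderBucketModules(
  [
    { id: { type: "SqlDatabase", name: "users" } },
    { id: { type: "Bucket", name: "images" } },
  ],
  "example-stack", // placeholder stack ID
);

// Saved to a file ending in .tf.json, this document can be read by Terraform.
console.log(JSON.stringify(terraformConfig, null, 2));
```

Generating only the module call keeps the division of responsibility intact: the platform team owns what the module does, and the application's specification supplies nothing more than the input values.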

Why this approach works

This approach to platform engineering gives application developers the freedom to write application code without having to write separate manifests. It keeps the deployment and runtime code together, which maintains context and improves developer efficiency. It also gives platform engineers peace of mind that the application team can only deploy infrastructure that has been standardized by the platform team.
