Adaptable software and architecture patterns are essential for scaling systems reliably. They let us capture and share knowledge about building resilient, scalable systems, tailored to specific use cases.

In the past, these patterns were often expressed entirely in application code because traditional systems were centralized. Applying them in the cloud, however, requires intertwining application code with infrastructure as code (IaC).

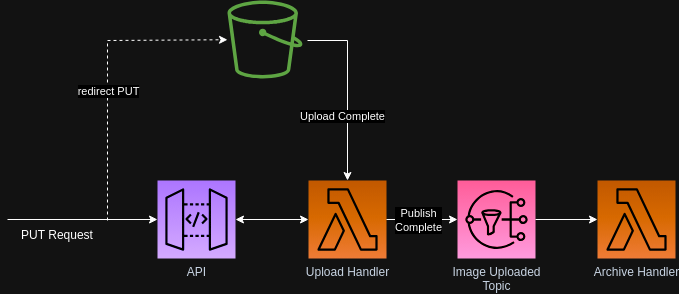

Let's illustrate with an example: an app that accepts image uploads to a bucket via an API. Once a file is uploaded, Lambda functions are triggered to process it and store the results in separate buckets.

Designing such an application with IaC requires an up-front approach: the infrastructure blueprints must be defined first, and the application code must then be written specifically to integrate with that infrastructure. Although the application and the infrastructure are maintained separately, they depend heavily on each other. The result is high coupling with low cohesion.

This split, combined with an up-front design approach, makes patterns hard to replicate. Ideally, Platform Engineering would bridge this gap. However, current thinking around self-service infrastructure still leaves the cohesion between applications and infrastructure weak, and maintaining application and infrastructure code as separate entities is burdensome. As a result, many teams fall back on deploying monolithic applications rather than take on the complexity of building distributed, cloud-optimized solutions.

What if, instead, we brought cloud primitives into the application layer, making applications inherently cloud-aware? This approach restores our ability to encapsulate cloud applications in software, so that patterns can once again be expressed repeatably in software alone. That, in turn, lets us share patterns as reusable software libraries.
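The following TypeScript sketch is a toy illustration of this idea. It models resources that are declared directly in application code. Each declaration is recorded in a registry, together with the access the code requests. A deployment tool could then derive the required infrastructure without separate IaC files. The names `resource`, `registry`, `manifest`, and `Access` are hypothetical. This is not Nitric's implementation; it only shows that declarations made in code can carry enough information to describe the infrastructure.

```typescript
// Toy model (hypothetical names): resources declared in application code are
// recorded so that infrastructure can be derived from the code itself.
type Kind = 'bucket' | 'topic';
type Access = 'reading' | 'writing' | 'publishing';

interface ResourceSpec {
  kind: Kind;
  name: string;
  access: Set<Access>;
}

// Every resource the application declares, keyed by "kind:name"
const registry = new Map<string, ResourceSpec>();

// Declaring a resource registers it once; .for() records the access the code needs
function resource(kind: Kind, name: string) {
  const key = `${kind}:${name}`;
  let spec = registry.get(key);
  if (spec === undefined) {
    spec = { kind, name, access: new Set<Access>() };
    registry.set(key, spec);
  }
  const declared = spec;
  return {
    for(...requested: Access[]): ResourceSpec {
      for (const a of requested) declared.access.add(a);
      return declared;
    },
  };
}

// A deployment tool could build its plan from the declarations alone
function manifest(): { kind: Kind; name: string; access: Access[] }[] {
  return Array.from(registry.values()).map((r) => ({
    kind: r.kind,
    name: r.name,
    access: Array.from(r.access).sort(),
  }));
}

// Application code: the calls that grant access also describe the infrastructure
resource('bucket', 'images').for('writing');
resource('bucket', 'images').for('reading');
resource('topic', 'images-fanout').for('publishing');

console.log(JSON.stringify(manifest()));
```

Running the sketch prints a manifest with two entries: the `images` bucket with reading and writing access, and the `images-fanout` topic with publishing access.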

For instance, using the Nitric framework, we can extract the aforementioned pattern into a reusable library:

```typescript
// lib/blob-processor.ts
import { File, bucket, faas, topic } from '@nitric/sdk'
import short from 'short-uuid'

interface FileEvent {
  key: string
}

export default (name: string) => {
  const blobBucket = bucket(name)
  const eventFanout = topic<FileEvent>(`${name}-fanout`)

  return {
    // register the handler for picking up bucket events
    startListener: () => {
      const eventFanoutPub = eventFanout.for('publishing')

      blobBucket.on('write', '*', async (ctx) => {
        await eventFanoutPub.publish({
          key: ctx.req.key,
        })
      })
    },
    // middleware that redirects the client to a signed upload URL
    uploadFileMiddleware: () => {
      const operableBucket = blobBucket.for('writing')

      return async (ctx: faas.HttpContext, next: faas.HttpMiddleware) => {
        // unique file name (fileId avoids shadowing the bucket `name` above)
        const fileId = short.generate()
        const uploadUrl = await operableBucket.file(`uploads/${fileId}`).getUploadUrl()

        ctx.res.headers['Location'] = [uploadUrl]
        ctx.res.status = 307

        return next ? next(ctx) : ctx
      }
    },
    // register a worker for upload events
    worker: (handler: (file: Pick<File, 'read' | 'name'>) => Promise<void>) => {
      const readableBucket = blobBucket.for('reading')

      eventFanout.subscribe(async (ctx) => {
        await handler(readableBucket.file(ctx.req.json().payload.key))
      })
    },
    // Allow access to the underlying bucket
    resource: blobBucket,
  }
}
```

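In this example, we define a reusable resource, `blobProcessor`: a factory that declares a bucket and a fan-out topic for a given name. It exposes three hooks. With `uploadFileMiddleware`, an application acts as the upload facilitator. With `startListener`, it forwards bucket write events to the topic. With `worker`, it processes uploaded files. The library captures the pattern in its entirety: both the infrastructure shown in the initial diagram and the application code that implements its behavior.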
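To make the event flow concrete, here is a minimal in-memory model of the same pattern. Writes to a bucket trigger a listener that republishes each event to a fan-out topic, and every worker subscribed to that topic receives every event. `InMemoryBucket`, `InMemoryTopic`, and `demo` are hypothetical stand-ins for the cloud resources, not Nitric APIs. The sketch models only the event flow, not real storage or messaging.

```typescript
interface FileEvent {
  key: string;
}

type Handler<T> = (event: T) => Promise<void>;

// Hypothetical stand-in for a topic: every subscriber receives every event
class InMemoryTopic<T> {
  private subscribers: Handler<T>[] = [];

  subscribe(handler: Handler<T>): void {
    this.subscribers.push(handler);
  }

  async publish(event: T): Promise<void> {
    await Promise.all(this.subscribers.map((handler) => handler(event)));
  }
}

// Hypothetical stand-in for a bucket that notifies listeners on each write
class InMemoryBucket {
  private files = new Map<string, Uint8Array>();
  private writeListeners: Handler<FileEvent>[] = [];

  onWrite(listener: Handler<FileEvent>): void {
    this.writeListeners.push(listener);
  }

  async write(key: string, data: Uint8Array): Promise<void> {
    this.files.set(key, data);
    for (const listener of this.writeListeners) await listener({ key });
  }

  async read(key: string): Promise<Uint8Array> {
    const data = this.files.get(key);
    if (data === undefined) throw new Error(`no such file: ${key}`);
    return data;
  }
}

// Wires the pattern together and uploads one file
async function demo(): Promise<{ sizes: number[]; keys: string[] }> {
  const images = new InMemoryBucket();
  const fanout = new InMemoryTopic<FileEvent>();

  // Like startListener(): forward every bucket write to the fan-out topic
  images.onWrite((event) => fanout.publish(event));

  // Like two separate worker() registrations: each receives every event
  const sizes: number[] = [];
  const keys: string[] = [];
  fanout.subscribe(async ({ key }) => {
    sizes.push((await images.read(key)).length);
  });
  fanout.subscribe(async ({ key }) => {
    keys.push(key);
  });

  await images.write('uploads/abc', new Uint8Array([1, 2, 3]));
  return { sizes, keys };
}

demo().then(({ sizes, keys }) => console.log(sizes, keys));
```

The topic delivers each event to every subscriber. As a result, the second worker needs no change to the upload path or to the first worker.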

With this library, creating an upload handler is straightforward:

```typescript
// functions/upload.ts
import { api } from '@nitric/sdk'
import blobProcessor from '../lib/blob-processor'

const imageProcessor = blobProcessor('images')
const uploadApi = api('uploads')

uploadApi.put('/upload/image', imageProcessor.uploadFileMiddleware())

// let this function handle the image upload triggers
imageProcessor.startListener()
```
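The upload route does not store the file itself. Instead, `uploadFileMiddleware` responds with a `307` and puts a signed upload URL from `getUploadUrl()` in the `Location` header, so a client uploads in two steps. The sketch assumes a hypothetical `send` function that performs an HTTP `PUT` and returns the status and `Location` header; in a real client it would wrap `fetch` or another HTTP library. The API base URL and storage URL are placeholders.

```typescript
// Hypothetical transport: performs an HTTP PUT and reports the status and any
// Location header. A real client would implement this with fetch or similar.
interface HttpResponse {
  status: number;
  location?: string;
}
type Send = (url: string, body?: Uint8Array) => Promise<HttpResponse>;

// Two-step upload: ask the API for a signed URL, then PUT the bytes to it directly
async function uploadImage(apiBase: string, bytes: Uint8Array, send: Send): Promise<string> {
  // Step 1: the upload route answers with a 307 whose Location is the signed URL
  const redirect = await send(`${apiBase}/upload/image`);
  if (redirect.status !== 307 || redirect.location === undefined) {
    throw new Error(`expected a 307 redirect, got ${redirect.status}`);
  }
  const signedUrl = redirect.location;

  // Step 2: send the file straight to storage, bypassing the API
  const upload = await send(signedUrl, bytes);
  if (upload.status < 200 || upload.status >= 300) {
    throw new Error(`upload failed with status ${upload.status}`);
  }
  return signedUrl;
}

// Usage with a fake transport standing in for the API and the storage service
const stored = new Map<string, Uint8Array>();
const fakeSend: Send = async (url, body) => {
  if (url.endsWith('/upload/image')) {
    return { status: 307, location: 'https://storage.example.com/uploads/abc?sig=xyz' };
  }
  if (body !== undefined) stored.set(url, body);
  return { status: 200 };
};

uploadImage('https://api.example.com', new Uint8Array([1, 2, 3]), fakeSend).then((url) => {
  console.log(`uploaded ${stored.get(url)?.length} bytes to ${url}`);
});
```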

Adding a worker that reacts to these uploads is just as simple:

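```typescript
// functions/process.ts
import blobProcessor from '../lib/blob-processor'

// reference the same 'images' processor used by the upload function
const imageProcessor = blobProcessor('images')

imageProcessor.worker(async (file) => {
  // read the file
  const fileBytes = await file.read()
  // Do some work on the file
})
```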

The approach also scales well: adding another file-processing service is as simple as adding another file like the one above.

Aligning applications and infrastructure is vital to increasing agility in cloud software delivery. As we've explored, turning cloud patterns into software, rather than treating application and infrastructure as separate entities, offers a powerful solution. By bringing cloud primitives into the application layer, we not only simplify deployment but also improve the reusability and scalability of our systems.

Frameworks like Nitric showcase the potential of this methodology, underscoring a future where cloud infrastructure is seamlessly integrated, code-driven, and more accessible to teams of all sizes. As we continue our cloud journeys, let's champion patterns that streamline complexities and empower developers to fully harness the cloud's potential.

If you'd like to read more, see how Nitric compares with traditional IaC approaches in our FAQs.
