At its core, cloud native is about leveraging the benefits of the cloud computing model to its fullest. This means building and running applications that take advantage of cloud-based infrastructures. The foundational principles that consistently rise to the forefront are:

- Scalability: Dynamically adjust resources based on demand.

- Resiliency: Design systems with failure in mind to ensure high availability.

- Flexibility: Decouple services and make them interoperable.

- Portability: Ensure applications can run on any cloud provider or even on premises.

In a previous article, we highlighted Kubernetes’ learning curve and the situations where using it directly might not be the best fit. Now we’ll zero in on constructing scalable cloud native applications using managed services.

Managed Services: Your Elevator to the Cloud

Reaching the cloud might feel like constructing a ladder piece by piece using tools like Kubernetes. But what if we could simply press a button and ride smoothly upward? That’s where managed services come into play, acting as our elevator to the cloud. It might not be obvious without diving deep into specific offerings, but managed services often use Kubernetes behind the scenes to build scalable platforms for your applications.

With infrastructure (and software in general), control and complexity go hand in hand: the more control you keep, the more complexity you take on. We can begin to reduce that complexity by delegating some of the control to managed services from cloud providers like AWS, Azure or Google Cloud.

Managed services let developers concentrate on their applications by delegating infrastructure, scaling and server management to the cloud provider. The core advantages follow directly: there are no servers to manage, and the provider handles dynamic scaling.

Think of managed services as an extension of your IT department, bearing the responsibility of ensuring infrastructure health, stability and scalability.

Choosing Your Provider

When designing a cloud native application, the primary focus should be on the architectural principles, patterns and practices that enable flexibility, resilience and scalability. Rather than selecting a specific cloud provider up front, teams get more value from starting development without being blocked by that decision.

Luckily, competition has driven cloud providers toward feature parity. They have established foundational building blocks that borrow heavily from one another and ultimately offer the same, or extremely similar, functionality and value to end users.

This paves the way for abstraction layers and frameworks like Nitric, which build on these similarities to give application developers common cloud development patterns with greater flexibility. The true value here is the ability to make technology decisions, such as the choice of cloud provider, on the engineering team’s timeline rather than up front as a blocker to starting development.

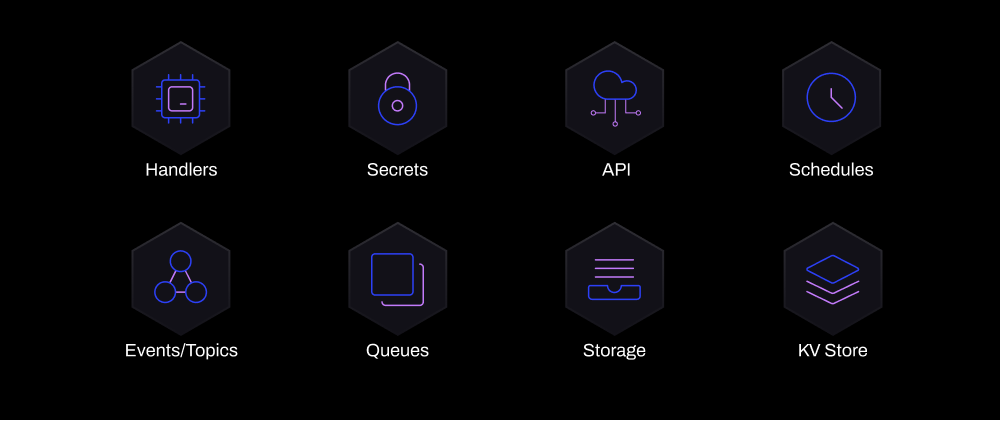
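
To make the idea of an abstraction layer concrete, here is a minimal sketch of application code written against a provider-neutral interface. It is not Nitric's API: the `Bucket` protocol, the `InMemoryBucket` adapter and the `save_profile_image` function are hypothetical names invented for illustration, and the adapter keeps objects in memory where a real one would wrap a provider's object storage SDK.

```python
from typing import Dict, Protocol


class Bucket(Protocol):
    """Hypothetical provider-neutral interface the application codes against."""

    def write(self, key: str, data: bytes) -> None: ...

    def read(self, key: str) -> bytes: ...


class InMemoryBucket:
    """Stand-in adapter; a real one would wrap a provider's object storage SDK."""

    def __init__(self, provider: str) -> None:
        self.provider = provider
        self._objects: Dict[str, bytes] = {}

    def write(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def read(self, key: str) -> bytes:
        return self._objects[key]


def save_profile_image(bucket: Bucket, user_id: str, image: bytes) -> str:
    """Application logic: depends only on the Bucket interface, not a provider."""
    key = f"profiles/{user_id}.png"
    bucket.write(key, image)
    return key


# The same application code runs unchanged against either adapter.
aws_like = InMemoryBucket("aws")
gcp_like = InMemoryBucket("gcp")
keys = [save_profile_image(b, "u42", b"\x89PNG") for b in (aws_like, gcp_like)]
```

Because `save_profile_image` never names a provider, the choice of provider becomes a matter of which adapter is wired in at deployment time, which is what allows the decision to be deferred.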

Resources that Scale by Default

The resource choices for apps set the trajectory for their growth; they shape the foundation upon which an application is built, influencing its scalability, security, flexibility and overall efficiency. Let’s categorize and examine some of the essential components that contribute to crafting a robust and secure application.

Execution, Processing and Interaction

- Handlers: Serve as entry points for executing code or processing events. They define the logic and actions performed when specific events or triggers occur.

- API gateway: Acts as a single entry point for managing and routing requests to various services. It provides features like rate limiting, authentication, logging and caching, offering a unified interface to your backend services or microservices.

- Schedules: Enable tasks or actions to be executed at predetermined times or intervals. Essential for automating repetitive or time-based workloads such as data backups or batch processing.
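
As a rough illustration of how handlers and an API gateway relate, the sketch below implements a toy in-process gateway that routes requests to registered handlers and applies a simple per-client request cap. The `Gateway` class, its methods and the cap are assumptions made for this example; a managed API gateway provides these features as configuration rather than code you maintain, and schedules are left out for brevity.

```python
from collections import defaultdict
from typing import Callable, Dict, Tuple

Handler = Callable[[dict], dict]


class Gateway:
    """Toy single entry point: routes requests to handlers and caps requests per client."""

    def __init__(self, max_requests_per_client: int) -> None:
        self._routes: Dict[Tuple[str, str], Handler] = {}
        self._seen: Dict[str, int] = defaultdict(int)
        self._limit = max_requests_per_client

    def route(self, method: str, path: str) -> Callable[[Handler], Handler]:
        """Decorator that registers a handler for a method and path."""
        def register(handler: Handler) -> Handler:
            self._routes[(method, path)] = handler
            return handler
        return register

    def dispatch(self, method: str, path: str, client: str, body: dict) -> dict:
        # Every request counts toward the client's cap, even unmatched ones.
        self._seen[client] += 1
        if self._seen[client] > self._limit:
            return {"status": 429, "body": "rate limit exceeded"}
        handler = self._routes.get((method, path))
        if handler is None:
            return {"status": 404, "body": "not found"}
        return {"status": 200, "body": handler(body)}


gateway = Gateway(max_requests_per_client=2)


@gateway.route("POST", "/orders")
def create_order(body: dict) -> dict:
    """Handler: the logic that runs when the matching request arrives."""
    return {"order": body["item"], "state": "created"}


first = gateway.dispatch("POST", "/orders", "client-a", {"item": "book"})
missing = gateway.dispatch("GET", "/unknown", "client-a", {})
third = gateway.dispatch("POST", "/orders", "client-a", {"item": "pen"})
```

Here the first call reaches the handler, the second finds no route, and the third is rejected because the client has exceeded its cap of two requests.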

Communication and Event Management

- Events: Central to event-driven architectures, these represent occurrences or changes that can initiate actions or workflows. They facilitate asynchronous communication between systems or components.

- Queues: Offer reliable message-based communication between components, enhancing fault tolerance, scalability and decoupled, asynchronous communication.
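
The difference between the two can be sketched in a few lines. In this simplified in-memory model, a topic fans each event out to every subscriber, while a queue hands each message to exactly one consumer in order. The `Topic` and `Queue` classes and the order and email names are hypothetical, and delivery here is synchronous; a managed service would deliver asynchronously and typically adds durability and retries.

```python
from collections import deque
from typing import Callable, Deque, List, Optional

Event = dict


class Topic:
    """Pub/sub: every subscriber receives every published event."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[Event], None]] = []

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: Event) -> None:
        for handler in self._subscribers:
            handler(event)


class Queue:
    """Work queue: each message is received once, in first-in, first-out order."""

    def __init__(self) -> None:
        self._messages: Deque[Event] = deque()

    def send(self, message: Event) -> None:
        self._messages.append(message)

    def receive(self) -> Optional[Event]:
        return self._messages.popleft() if self._messages else None


order_created = Topic()
email_jobs = Queue()
audit_log: List[str] = []

# Two independent subscribers react to the same event.
order_created.subscribe(lambda e: audit_log.append(f"order {e['id']} created"))
order_created.subscribe(lambda e: email_jobs.send({"to": e["email"], "order": e["id"]}))

order_created.publish({"id": 1, "email": "a@example.com"})
order_created.publish({"id": 2, "email": "b@example.com"})

# A worker drains the queue at its own pace, decoupled from the publisher.
first_job = email_jobs.receive()
second_job = email_jobs.receive()
empty = email_jobs.receive()
```

The publisher knows nothing about the audit log or the email worker, which is the decoupling that lets each component scale and fail independently.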

Data Management and Storage

- Collections: Data structures, such as arrays, lists or sets, that store and organize related data elements. They underpin many application scenarios by facilitating efficient data storage, retrieval and manipulation.

- Buckets: Containers in object storage systems like Amazon S3 or Google Cloud Storage. They provide scalable and reliable storage for diverse unstructured data types, from media files to documents.

Security and Confidentiality

- Secrets: Securely store sensitive data such as API keys or passwords. A centralized secret management system keeps these critical pieces of information protected and accessible only to the services and people that need them.
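
A small sketch shows what "accessible only to those who need them" can look like in practice. The `SecretStore` class below is a hypothetical in-memory stand-in for a managed secret service, with versioned values and an explicit read grant per secret; the secret and service names are invented for the example, and a real store would also encrypt values at rest.

```python
from typing import Dict, List, Set


class SecretStore:
    """Toy centralized secret store with versioning and per-secret read grants."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}
        self._readers: Dict[str, Set[str]] = {}

    def put(self, name: str, value: str) -> int:
        """Store a new version of the secret and return its version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(value)
        return len(versions)

    def allow_read(self, name: str, principal: str) -> None:
        """Grant one principal read access to one secret."""
        self._readers.setdefault(name, set()).add(principal)

    def latest(self, name: str, principal: str) -> str:
        """Return the newest version, but only to principals with a grant."""
        if principal not in self._readers.get(name, set()):
            raise PermissionError(f"{principal} may not read {name}")
        return self._versions[name][-1]


store = SecretStore()
store.put("payment-api-key", "key-v1")
store.put("payment-api-key", "key-v2")  # rotating the key adds a version
store.allow_read("payment-api-key", "billing-service")

api_key = store.latest("payment-api-key", "billing-service")

try:
    store.latest("payment-api-key", "marketing-service")
    denied = False
except PermissionError:
    denied = True
```

Access is denied by default, so a service without an explicit grant cannot read the key, and rotating the key requires no change in the services that read it.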

Automating Deployments

Cloud providers have long offered their own CI/CD services, but these often fall short of delivering a truly seamless experience. Services like AWS CodePipeline or Azure DevOps require intricate setup and maintenance.

Why is this a problem?

- Time-consuming: Setting up and managing these pipelines takes away valuable developer time that could be better spent on feature development.

- Complexity: Each cloud provider’s CI/CD solution has its own quirks and learning curve, making it harder for teams to switch providers or maintain multicloud strategies.

- Error-prone: Manual steps or misconfigurations can lead to deployment failures or, worse, downtime.

You might notice a few similarities here with some of the challenges of adopting K8s, albeit at a smaller scale. However, there are options that simplify the deployment process significantly, such as using an automated deployment engine.

Example: Simplified Process

This is the approach Nitric takes to streamline the deployment process:

- The developer pushes code to the repository.

- Nitric’s engine detects the change, builds the necessary infrastructure specification and determines the minimal permissions, policies and resources required.

- The entire infrastructure needed for the app is automatically provisioned, without the developer explicitly defining it and without the need for a standalone Infrastructure as Code (IaC) project.

- Basically, the deployment engine intelligently deduces and sets up the required infrastructure for the application, ensuring roles and policies are configured for maximum security with minimal privileges.
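
The steps above can be sketched as a small function that derives an infrastructure specification from the resources an application declares it uses. This is an illustrative model only, not Nitric's engine or specification format: the `Usage` record, the `build_spec` function and the service and resource names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Usage:
    """One declared use of a resource by a service (hypothetical shape)."""
    service: str
    kind: str
    name: str
    actions: FrozenSet[str]


def build_spec(usages: List[Usage]) -> dict:
    """Derive the resources to provision and a least-privilege policy per service."""
    resources: Set[Tuple[str, str]] = set()
    grants: Dict[str, Dict[str, Set[str]]] = {}
    for usage in usages:
        # Each resource is provisioned once, however many services use it.
        resources.add((usage.kind, usage.name))
        # A service is granted only the actions it declared, nothing more.
        per_service = grants.setdefault(usage.service, {})
        per_service.setdefault(f"{usage.kind}:{usage.name}", set()).update(usage.actions)
    return {
        "resources": sorted(resources),
        "policies": {
            service: {res: sorted(actions) for res, actions in by_resource.items()}
            for service, by_resource in grants.items()
        },
    }


spec = build_spec([
    Usage("orders-api", "bucket", "receipts", frozenset({"write"})),
    Usage("orders-api", "queue", "emails", frozenset({"send"})),
    Usage("email-worker", "queue", "emails", frozenset({"receive"})),
    Usage("email-worker", "bucket", "receipts", frozenset({"read"})),
])
```

In this model, `orders-api` can write receipts but never read them, and `email-worker` can read them but never write, even though both share the same bucket. Deriving policies from declared usage is what keeps privileges minimal without anyone writing the policies by hand.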

This streamlined process relieves application and operations teams from activities like:

- Containerizing applications.

- Crafting, troubleshooting and sustaining IaC projects built with tools like Terraform.

- Managing discrepancies between application needs and existing infrastructure.

- Initiating temporary servers for prototypes or test phases.

Summary

Using managed services streamlines the complexities associated with infrastructure, allowing organizations to zero in on their primary goals: application development and business expansion. Managed services, serving as an integrated arm of the IT department, facilitate a smoother and more confident transition to the cloud than working directly with K8s. They’re a great choice for cloud native development to reinforce digital growth and stability.

With tools like Nitric streamlining the deployment processes and offering flexibility across different cloud providers, the move toward a cloud native environment without Kubernetes seems not only feasible but also compelling. If you’re on a journey to build a cloud native application or a platform for multiple applications, we’d love to hear from you.

Start by exploring the Nitric docs.

This article was originally published on The New Stack.